Resilience in today’s liquid business environment demands flexibility. The term “observability” replaces monitoring, reflecting the need to adapt and be agile in the face of challenges. The key is to dissolve operations into the cloud, integrating tools and operational expertise for effective resilience.

I remember that when I started my professional career (in a bank), one of the first tasks I was handed was to secure an email server exposed to the internet. Conversations over coffee revolved around new trends that seemed suicidal: they wanted to move service operations to servers on the internet!

Nobody was even talking about the cloud at that time. Software as a service was taking its first steps, but, in short, everything was on-premises, and infrastructure was the queen of computing, because without a data center, there was no business.

Two decades have gone by, and the same thing that happened to mainframes has happened to data centers. They are relics of the past: still necessary, but outside our daily lives. No one builds business continuity around the concept of the data center anymore. Who cares about data centers now?

The number of devices worldwide that are connected to each other to collect and analyze data and perform tasks autonomously is projected to grow from 7 billion in 2020 to over 29.4 billion in 2030.

How many of those devices are located in a known data center? Furthermore, does it really matter where they are?

Many times, we don’t know who they are, who maintains them, or even what country they are in. No matter how much data protection laws insist, technology evolves much faster than legislation.

The most important challenge is ensuring business continuity, and that task is difficult, to say the least, when it is increasingly hard to know how to manage a business’s critical infrastructure, because the very concept of infrastructure is changing.


What does IT infrastructure mean?

It is the suite of applications that manages the data needed to run your business. Below those applications lies “everything else”: databases, engines, libraries, full technology stacks, operating systems, hardware, and the hundreds of people in charge of each piece of that great Tower of Babel.

What does business continuity mean?

According to ISO 22301, business continuity is defined as the “ability of an organization to continue the delivery of products and services in acceptable timeframes at a predefined capacity during an interruption.”

In practice, there is talk of disaster recovery and incident management, in a comprehensive approach that establishes a series of activities that an organization can initiate to respond to an incident, recover from the situation and resume business operations at an acceptable level. Generally, these actions have to do with infrastructure in one way or another.

Business continuity today

Before, IT was simpler: infrastructure was located in one or more data centers.

Now, we don’t even know where it is, beyond a series of intentionally fuzzy concepts. What we do know is that the hardware is not ours, and neither are the technology, the technicians, or the networks. Only the data is (supposedly).

What does business resilience mean?

It is funny that this term has become trendy, when resilience was the basic concept behind the creation of the Internet. It simply means that it is not a matter of hitting a wall and getting back up, but of accepting mistakes and moving forward; in other words, being a little more elegant and flexible when facing adversity.

Resilience and business continuity

In these liquid times, where everything flows, you have to be flexible and change the paradigm. That is why we no longer talk about monitoring but about observability: the all-seeing eye is a bit of an illusion, because there is too much to see. Old models don’t work.

It’s not a scalability problem (or at least it’s not just a scalability problem), it’s a paradigm shift problem.

Let’s solve the problem using the problem

Today all organizations are somehow dissolved in the cloud. They mix their own infrastructure with the cloud, they mix their own technology with the cloud, they mix their own data with the cloud. Why not mix observability with the cloud?

I’m not talking about using a SaaS monitoring tool; that would mean continuing with the previous paradigm. I’m talking about our tool dissolving into the cloud, our operational knowledge dissolving into the cloud, and our organization’s resilience being based on precisely that: being in the cloud.

As in the beginnings of the internet, you may cut off a hydra’s head, but the rest keeps biting, and soon, it will grow back.

Achieving something like this is not about purchasing one or more tools or hiring one or more services; that would be business as usual.

Tip: the F in Pandora FMS stands for Flexible.

Resilience, business continuity and cloud

The first step should be to accept that you cannot control everything. Your business is alive, so do not try to force it into a corset; manage each element as a living part of a whole. Different clouds, different applications, different work teams, and a single technology to unite them all? Isn’t it tempting?

Talk to your teams; they probably have their own opinion on the subject, so why not integrate their expertise into a joint solution? The key is not to choose a solution, but a solution of solutions: something that lets you integrate different needs, something flexible that you do not need to control, only look at, giving you a complete map so that, whatever happens, you can move forward. That’s what continuity is all about.

Some tips on business continuity, resilience and cloud

Why size a service instead of managing items on demand?

A service is useful insofar as it provides customers with the benefits they need from it. It is therefore essential to guarantee its operation and operability.

Sizing a service is important to ensure its profitability and quality. When sizing a service, the amount of resources needed, such as personnel, equipment, and technology, can be determined to meet the demand efficiently and effectively. That way, you will avoid problems such as long waiting times, overwork for staff, low quality of service or loss of customers due to poor attention.
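To make sizing concrete, here is a minimal sketch based on the Erlang C queueing model, a standard technique for staffing service desks and call centers. It finds the smallest number of agents that keeps the probability of a customer having to wait below a target. The arrival rate, handling time, and target are illustrative assumptions, the model assumes random (Poisson) arrivals and exponentially distributed handling times, and none of this is a Pandora FMS feature.

```python
import math

def erlang_c(agents: int, load: float) -> float:
    """Probability that an arriving request has to wait (Erlang C).

    agents: number of parallel agents or servers
    load: offered traffic in Erlangs (arrivals per hour * hours per request)
    Fine for modest team sizes; very large values would need a recursive form.
    """
    if agents <= load:
        return 1.0  # unstable system: the queue grows without bound
    # Sum of load^k / k! for k = 0 .. agents - 1
    summation = sum(load**k / math.factorial(k) for k in range(agents))
    top = load**agents / math.factorial(agents) * agents / (agents - load)
    return top / (summation + top)

def agents_needed(arrivals_per_hour: float, handle_minutes: float,
                  max_wait_probability: float) -> int:
    """Smallest number of agents so that P(wait) stays at or below the target."""
    load = arrivals_per_hour * handle_minutes / 60.0  # offered load in Erlangs
    agents = max(1, math.ceil(load))
    while erlang_c(agents, load) > max_wait_probability:
        agents += 1
    return agents

# Hypothetical service desk: 60 tickets/hour, 6 minutes each,
# and at most 20% of customers should have to wait.
print(agents_needed(60, 6, 0.20))  # 9
```

With these figures the offered load is 6 Erlangs, yet 6 agents would never catch up and 9 are needed to meet the target; relaxing the target or shortening handling times lowers that number, which is exactly the trade-off between waiting times and cost described above.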

In addition, sizing a service will allow you to anticipate possible peaks in demand and adapt the capacity appropriately to respond satisfactorily to the needs of customers and contribute to their satisfaction. Likewise, it also helps you optimize operating costs and maximize service profitability.
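One common way to anticipate peaks is to size for a high percentile of observed demand plus a safety margin, rather than for the average. The sketch below assumes hourly request counts and a hypothetical per-node capacity; the 95th percentile and 20% headroom are illustrative choices, not recommendations.

```python
import math
import statistics

def nodes_for_peak(hourly_demand: list[float], capacity_per_node: float,
                   percentile: int = 95, headroom: float = 0.20) -> int:
    """Nodes needed to cover the given demand percentile plus headroom."""
    # With n=100, statistics.quantiles returns the 1st..99th percentile cut points.
    cut_points = statistics.quantiles(hourly_demand, n=100, method="inclusive")
    peak = cut_points[percentile - 1]
    required = peak * (1 + headroom)
    return math.ceil(required / capacity_per_node)

# Hypothetical week of hourly request counts: flat traffic
# plus a 4-hour daily spike (28 of 168 hours).
demand = [400.0] * 140 + [1_000.0] * 28
print(nodes_for_peak(demand, capacity_per_node=250.0))  # 5
```

Sizing for the average of this data (500 requests per hour) with the same headroom would suggest only 3 nodes and leave the daily spikes uncovered.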

Why find the perfect tool if you already have it in-house?

Integrate your internal solution with other external tools that can enhance its functionality. Before embarking on a never-ending quest, consider what you already have at home. If you have an internal solution that works well for your business, why not make the most of it by integrating it with other external tools?

For example, imagine that you already have an internal customer relationship management (CRM) system tailored to the specific needs of your company. Have you thought about integrating it with digital marketing tools like HubSpot or Salesforce Marketing Cloud? This integration could take your marketing strategies to the next level, automating processes and optimizing your campaigns in ways you never imagined.

And if you’re using an internal project management system to keep everything in order, why not consider incorporating online collaboration tools like Trello or Asana? These platforms can complement your existing system with additional features, such as Kanban boards and task tracking, making your team’s life easier and more efficient.

Also, let’s not forget IT service management. If you already have an internal ITSM (IT Service Management) solution, such as Pandora ITSM, why not integrate it with other external tools that can enhance its functionality? Integrating Pandora ITSM with monitoring tools like Pandora FMS can provide a more complete and proactive view of your IT infrastructure, allowing you to identify and solve issues before they impact your services and users.
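Much of the value of connecting monitoring to ITSM lies in the glue logic that turns alerts into tickets without flooding the service desk. The following sketch is deliberately tool-agnostic: the `Alert`, `Ticket`, and `AlertToTicketBridge` names and the one-ticket-per-host-and-check rule are hypothetical, and they do not reflect the Pandora FMS or Pandora ITSM APIs, whose real calls would replace the in-memory dictionary.

```python
from dataclasses import dataclass, field

@dataclass
class Alert:
    host: str
    check: str
    severity: str  # hypothetical values: "warning" or "critical"
    message: str

@dataclass
class Ticket:
    ticket_id: int
    host: str
    check: str
    priority: str
    notes: list[str] = field(default_factory=list)

class AlertToTicketBridge:
    """Hypothetical bridge: at most one open ticket per (host, check) pair."""

    PRIORITY = {"warning": "normal", "critical": "high"}

    def __init__(self) -> None:
        self.open_tickets: dict[tuple[str, str], Ticket] = {}
        self._next_id = 1

    def handle(self, alert: Alert) -> Ticket:
        key = (alert.host, alert.check)
        ticket = self.open_tickets.get(key)
        if ticket is None:
            # First alert for this problem: open a new ticket.
            ticket = Ticket(self._next_id, alert.host, alert.check,
                            self.PRIORITY.get(alert.severity, "normal"))
            self._next_id += 1
            self.open_tickets[key] = ticket
        elif alert.severity == "critical":
            # Repeated alert that got worse: escalate instead of duplicating.
            ticket.priority = "high"
        ticket.notes.append(alert.message)
        return ticket

    def resolve(self, host: str, check: str) -> None:
        """Close the ticket once monitoring reports the check is healthy again."""
        self.open_tickets.pop((host, check), None)

bridge = AlertToTicketBridge()
t1 = bridge.handle(Alert("db01", "disk", "warning", "Disk at 85%"))
t2 = bridge.handle(Alert("db01", "disk", "critical", "Disk at 95%"))
print(t1 is t2, t2.priority, len(t2.notes))  # True high 2
```

Deduplicating by host and check keeps a worsening disk from opening a new ticket on every poll, while still escalating its priority.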

The key is to make the most of what you already have and further enhance it by integrating it with other tools that can complement it. Have you tried this strategy before? It could be the key to streamlining your operations and taking your business to the next level.

Why force your team to work in a specific way?

Incorporate other teams and integrate them into yours (it may be easier than you imagine, and much cheaper).

The imposition of a single work method can limit the creativity and productivity of the team. Instead, consider incorporating new teams and work methods, seamlessly integrating them into your organization. Not only can this encourage innovation and collaboration, but it can also result in greater efficiency and cost reduction. Have you explored the option of incorporating new teams and work methods into your organization? Integrating diverse perspectives can be a powerful driver for business growth and success.

Why choose a single cloud if you can integrate several?

Supposed simplicity can be a prison with very high walls. Never bet everything on a single supplier, or you will become dependent on it. Use European alternatives to protect yourself from future legal and political changes.

Choosing a single cloud provider can offer simplicity in management, but it also carries significant risks, such as over-reliance and vulnerability to legal or political changes. Instead, integrating multiple cloud providers can provide greater flexibility and resilience, thereby reducing the risks associated with relying on a single provider.
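The resilience gained from several providers can be estimated with a simple formula. Assuming failures are independent (a strong assumption, since providers may share regions, DNS, or upstream networks) and that any single provider can carry the full load, the combined availability is A = 1 - (1 - a1)(1 - a2)..., that is, one minus the probability that all of them are down at once. The 99.9% and 99.5% figures below are illustrative, not any provider’s published SLA.

```python
import math

def combined_availability(availabilities: list[float]) -> float:
    """Availability of redundant providers, assuming independent failures
    and that any single provider can serve the full load."""
    all_down = math.prod(1 - a for a in availabilities)
    return 1 - all_down

def downtime_hours_per_year(availability: float) -> float:
    return (1 - availability) * 365 * 24

single = combined_availability([0.999])
dual = combined_availability([0.999, 0.995])
print(f"single: {downtime_hours_per_year(single):.2f} h/year")
print(f"dual:   {downtime_hours_per_year(dual):.3f} h/year")
```

Under these assumptions, expected downtime drops from about 8.76 hours a year with one provider to roughly 0.044 hours (under 3 minutes) with two, even though the second provider is individually less available than the first.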

Have you considered diversifying your cloud providers to protect your business from potential contingencies? Integrating European alternatives can provide an additional layer of protection and stability in an increasingly complex and changing business environment.

Why choose high availability?

Pandora FMS offers HA on servers, agents and its console for demanding environments ensuring their continuity.

High availability (HA) is a critical component in any company’s infrastructure, especially in environments where service continuity is key. With Pandora FMS, you have the ability to deploy HA to servers, agents, and the console itself, ensuring your systems are always online even in high demand or critical environments.

Imagine a scenario where your system experiences a significant load. In such circumstances, distributing the load evenly among several servers becomes crucial. Pandora FMS allows you to do this, ensuring that, if a component fails, the system remains operational without interruptions.
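The general principle of spreading load across several servers while tolerating a failed one can be illustrated with a round-robin dispatcher that skips unhealthy nodes. This is a generic sketch with hypothetical server names; it does not describe how Pandora FMS implements HA internally.

```python
import itertools

class RoundRobinPool:
    """Distributes work evenly across servers, skipping any marked as down."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = servers
        self.healthy = set(servers)
        self._cycle = itertools.cycle(servers)

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self) -> str:
        # One full lap over the pool is enough to find a healthy server.
        for _ in range(len(self.servers)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")

pool = RoundRobinPool(["srv-a", "srv-b", "srv-c"])
print([pool.next_server() for _ in range(3)])  # ['srv-a', 'srv-b', 'srv-c']
pool.mark_down("srv-b")
print([pool.next_server() for _ in range(4)])  # ['srv-a', 'srv-c', 'srv-a', 'srv-c']
```

When a server is marked down, its share of the work flows to the remaining servers without the caller noticing, and marking it up again returns it to the rotation.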

In addition, Pandora FMS’s modular architecture allows components to work in synergy, with the remaining ones taking on the workload of any that fail. This helps create a fault-tolerant infrastructure in which system stability is maintained, even in the face of unforeseen setbacks.
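The idea of surviving components taking on the burden of a failed one can be sketched as a rebalancing step: when a server drops out, each of its monitored agents is handed to whichever remaining server currently has the fewest. Server and agent names are hypothetical, and a real deployment would also need to move configuration and state, which this sketch ignores.

```python
import heapq

def reassign_on_failure(assignments: dict[str, list[str]],
                        failed: str) -> dict[str, list[str]]:
    """Move the failed server's agents to the least-loaded survivors.

    Returns a new mapping; the input dictionary is left unchanged.
    """
    orphans = assignments.get(failed, [])
    survivors = {s: list(a) for s, a in assignments.items() if s != failed}
    if orphans and not survivors:
        raise RuntimeError("no surviving servers to take over")
    # Min-heap of (current load, server name): the lightest server is picked first.
    heap = [(len(agents), name) for name, agents in survivors.items()]
    heapq.heapify(heap)
    for agent in orphans:
        load, name = heapq.heappop(heap)
        survivors[name].append(agent)
        heapq.heappush(heap, (load + 1, name))
    return survivors

cluster = {
    "srv-a": ["web1", "web2"],
    "srv-b": ["db1"],
    "srv-c": ["cache1", "cache2", "cache3"],
}
print(reassign_on_failure(cluster, "srv-c"))
```

Here the three orphaned agents end up split so that both survivors carry three agents each, instead of piling everything onto one server.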

Why centralize if you can distribute?

Choose a flexible tool, such as Pandora FMS.

Centralizing resources may seem like a logical strategy to simplify management, but it can limit the flexibility and resilience of your infrastructure. Instead of locking your assets into a single point of failure, consider distributing your resources strategically to optimize performance and availability across your network.

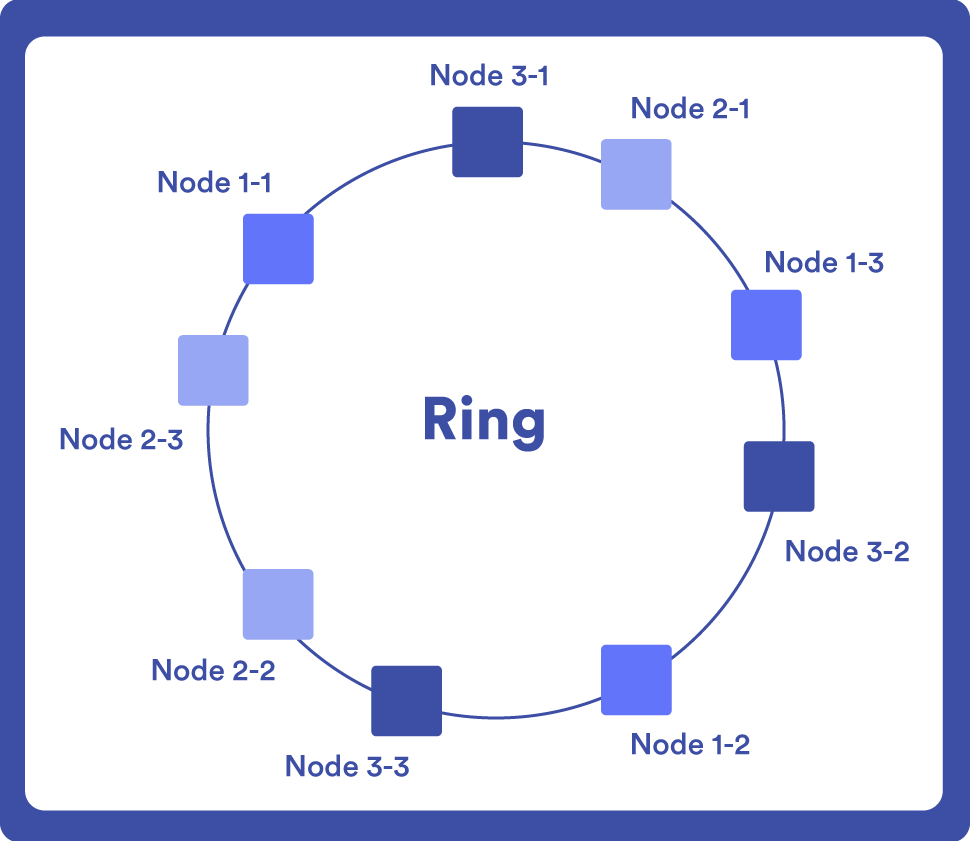

With Pandora FMS, you have the ability to implement distributed monitoring that adapts to the specific needs of your business. This solution allows you to deploy monitoring agents across multiple locations, providing you with full visibility into your infrastructure in real time, no matter how dispersed it is.
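A core task in distributed monitoring is noticing when a remote agent stops reporting, wherever it runs. In the hypothetical sketch below, agents in several locations send heartbeats to a collector, which flags any agent silent for longer than a multiple of its reporting interval. The location names, the 300-second interval, and the 2x tolerance are assumptions for illustration; this is not the Pandora FMS agent protocol.

```python
from dataclasses import dataclass

@dataclass
class Heartbeat:
    agent: str
    location: str
    timestamp: float  # seconds since epoch when the agent reported

def stale_agents(heartbeats: list[Heartbeat], now: float,
                 interval: float = 300.0,
                 tolerance: float = 2.0) -> dict[str, list[str]]:
    """Group agents that missed more than `tolerance` intervals, by location."""
    latest: dict[str, Heartbeat] = {}
    for hb in heartbeats:
        # Keep only the most recent heartbeat per agent.
        if hb.agent not in latest or hb.timestamp > latest[hb.agent].timestamp:
            latest[hb.agent] = hb
    stale: dict[str, list[str]] = {}
    for hb in latest.values():
        if now - hb.timestamp > interval * tolerance:
            stale.setdefault(hb.location, []).append(hb.agent)
    return stale

now = 10_000.0
beats = [
    Heartbeat("edge-01", "branch-office", now - 120),   # reported 2 minutes ago
    Heartbeat("edge-02", "branch-office", now - 900),   # silent for 15 minutes
    Heartbeat("vm-17", "cloud-eu", now - 1500),         # older, superseded report
    Heartbeat("vm-17", "cloud-eu", now - 200),          # recent report
]
print(stale_agents(beats, now))  # {'branch-office': ['edge-02']}
```

Grouping by location makes it easier to tell a single silent agent from a whole site that has lost connectivity.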

By decentralizing monitoring with Pandora FMS, you can proactively identify and solve issues, minimizing downtime and maximizing operational efficiency. Have you considered how distributed monitoring with Pandora FMS could help you manage and control your infrastructure more effectively and efficiently? Its flexibility and adaptability can offer you a robust, customized solution for your IT monitoring needs.
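Proactive detection often means acting on trends before a threshold is crossed. As an illustration, the sketch below fits a least-squares line to disk-usage samples and estimates how many hours remain until a usage limit is reached. The readings are invented, and a straight-line fit is a simplifying assumption that works poorly for bursty or cyclical usage; this is a generic technique, not a description of any Pandora FMS feature.

```python
from typing import Optional

def hours_until_full(samples: list[tuple[float, float]],
                     limit_percent: float = 100.0) -> Optional[float]:
    """Estimate hours until usage reaches `limit_percent` with a least-squares line.

    samples: (hour, used_percent) pairs, oldest first.
    Returns None if usage is flat or shrinking.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n
    cov = sum((t - mean_t) * (u - mean_u) for t, u in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        raise ValueError("samples need distinct timestamps")
    slope = cov / var  # percentage points per hour
    if slope <= 0:
        return None
    intercept = mean_u - slope * mean_t
    last_t = samples[-1][0]
    # Hours from the latest sample until the fitted line reaches the limit.
    return (limit_percent - intercept) / slope - last_t

# Hypothetical readings: disk usage grows 0.5 points per hour from 70%.
readings = [(0, 70.0), (6, 73.0), (12, 76.0), (18, 79.0), (24, 82.0)]
print(hours_until_full(readings, limit_percent=95.0))  # 26.0
```

An alert raised when the estimate falls below, say, a day gives the team time to act before the disk actually fills up.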

About Version 2

Version 2 is one of the most dynamic IT companies in Asia. The company develops and distributes IT products for Internet and IP-based networks, including communication systems, Internet software, security, network, and media products. Through an extensive network of channels, point of sales, resellers, and partnership companies, Version 2 offers quality products and services which are highly acclaimed in the market. Its customers cover a wide spectrum which include Global 1000 enterprises, regional listed companies, public utilities, Government, a vast number of successful SMEs, and consumers in various Asian cities.

About PandoraFMS

Pandora FMS is a flexible monitoring system, capable of monitoring devices, infrastructures, applications, services and business processes.

Of course, one of the things that Pandora FMS can control is the hard disks of your computers.